Now that a proper TV stand is in place, I thought it time to revisit the audio setup. I say this because the stand slightly modified the arrangement of some speakers, and music sounded just different enough that I couldn’t let it go. So when the girls went out grocery shopping, I used the rare moment of silence to begin a calibration.

In theory, sine waves sampled across the spectrum from 20Hz to 20kHz should all register a similar decibel level. In practice, the physical limitations of speaker drivers prevent this, but settings can be tweaked to reduce the disparity. I lack any sort of professional calibration equipment, but in reality a good sound setting comes down to the listener’s preference, so I opted to use what I had on hand and settle for an approximation.
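For anyone who would rather generate test tones than hunt for them, here is a minimal sketch using only Python's standard library. The function names (`sine_samples`, `write_tone`), the 44.1kHz sample rate, and the half-scale amplitude are my own assumptions for illustration, not anything tied to a particular tool. Every tone is written at the same peak amplitude, so any difference in measured level comes from the speakers and the room rather than the file.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (assumed; CD-quality rate)


def sine_samples(freq_hz, seconds=1.0, amplitude=0.5):
    """Return 16-bit integer samples of a sine wave at a fixed peak amplitude."""
    count = int(SAMPLE_RATE * seconds)
    peak = int(amplitude * 32767)  # scale to the signed 16-bit range
    return [
        int(round(peak * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)))
        for n in range(count)
    ]


def write_tone(path, freq_hz, seconds=1.0):
    """Write a mono, 16-bit WAV test tone to the given path."""
    samples = sine_samples(freq_hz, seconds)
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)           # mono
        wav.setsampwidth(2)           # 2 bytes = 16 bits per sample
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))
```

Calling `write_tone("tone_1000hz.wav", 1000, seconds=5)` would produce a five-second 1kHz tone; the file name is just a placeholder.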

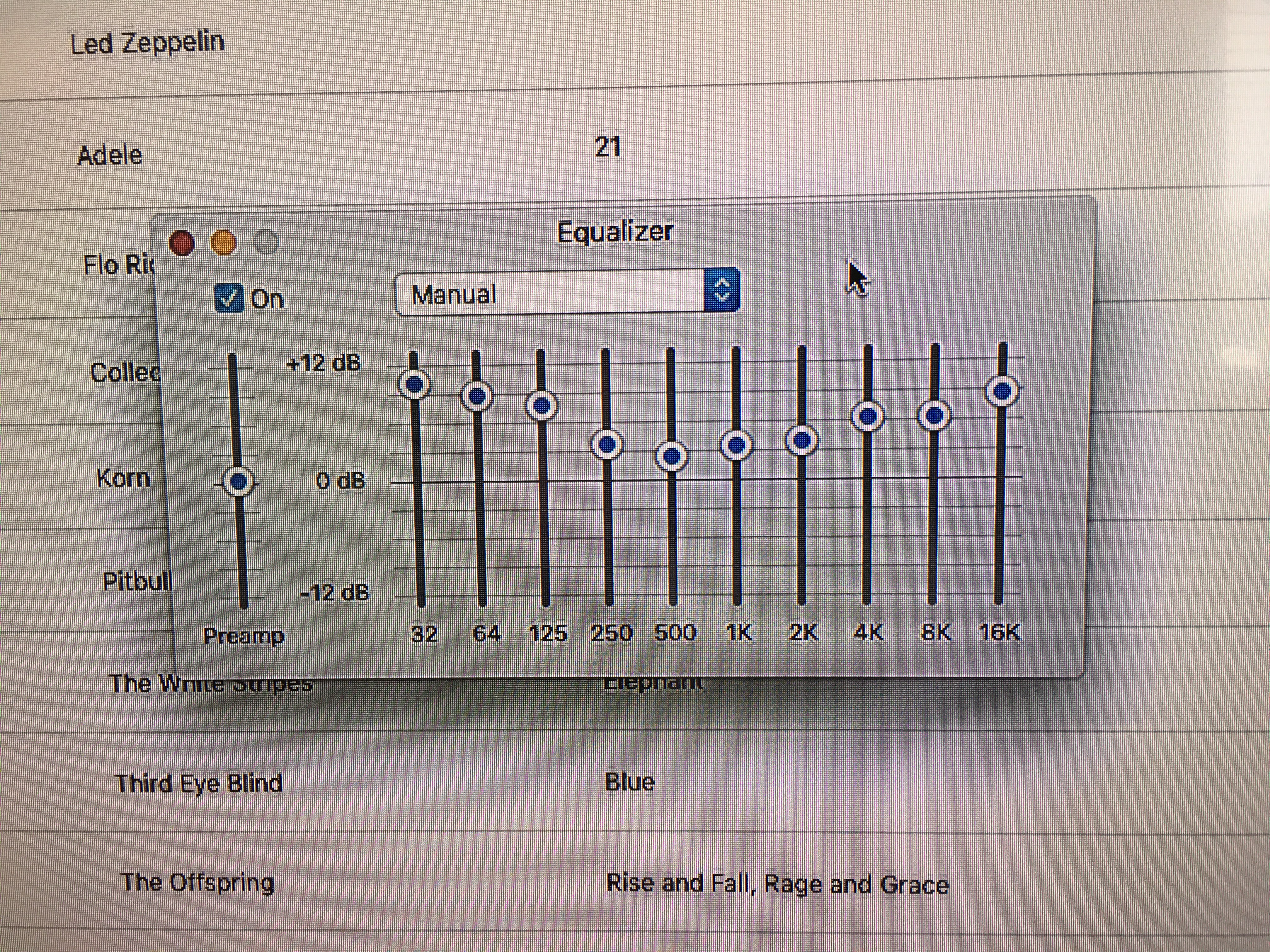

iTunes has, through whatever typically obscure Apple methodology, determined the above frequencies to be focal points in the human range of hearing. I’m sure there’s some kind of math behind it, but I didn’t care enough to research it.

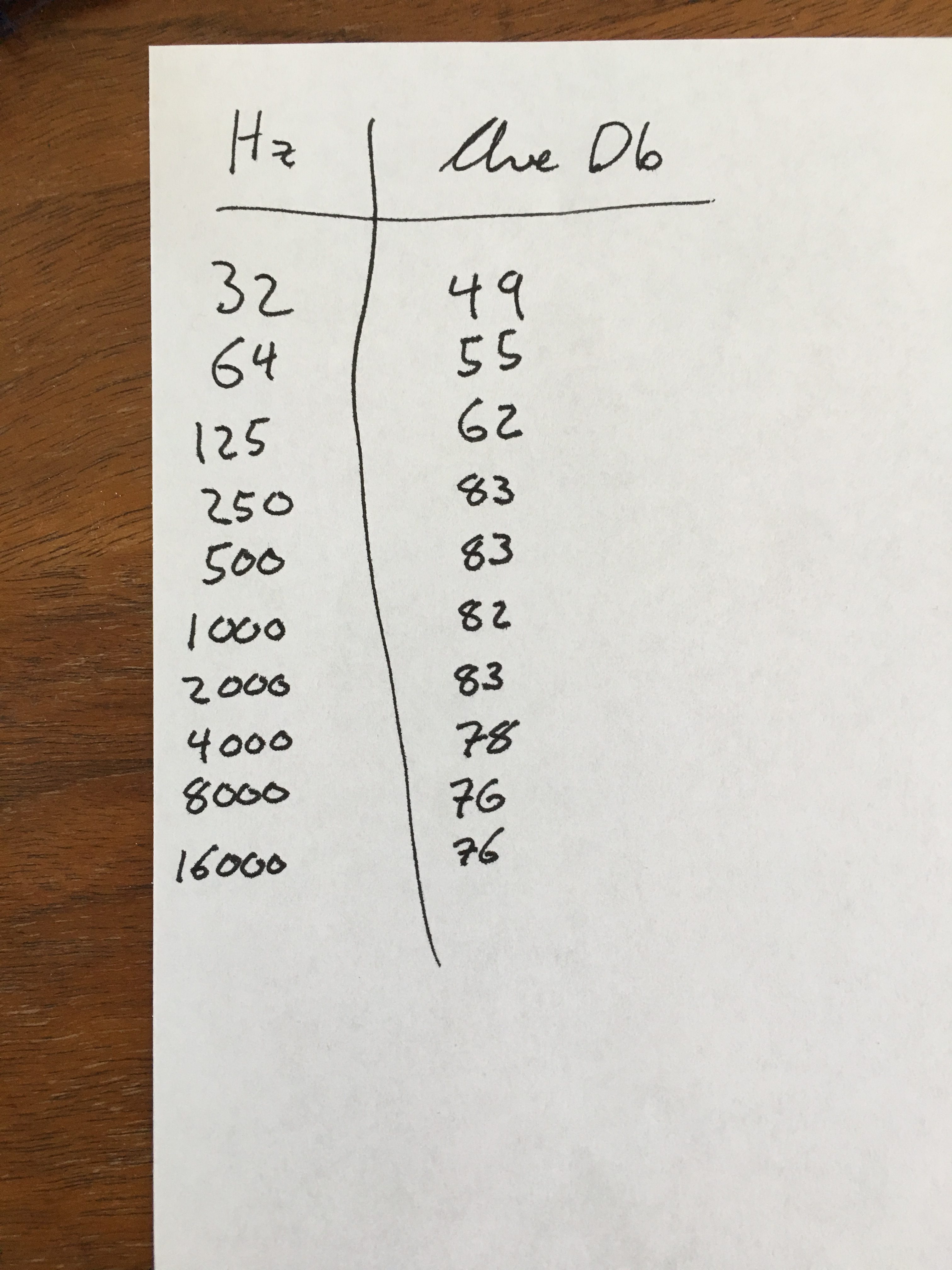

So, I YouTubed each of these frequencies for a test tone, played the tone, then measured the decibel level with a free sound meter app on my phone. I’m not sure how accurate this method was, but I aggregated the figures as guidelines (chasing the dogs out of the room in the process as they did not appreciate the test tones above 1kHz):

I noticed an amplitude dropoff at the high and low ranges, which I found satisfying in that I had already adjusted the levels to compensate, based on my hearing alone. I made some minor adjustments.
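The comparison itself is simple arithmetic, roughly sketched below. The readings in the example are hypothetical placeholders, not the figures from this session, and `eq_offsets` is a name I made up. It averages the readings and reports how far each band would need to move to reach that average. Averaging decibel values directly is a simplification, but it fits the "approximation" spirit of the exercise.

```python
def eq_offsets(readings_db):
    """Map each band (Hz) to the dB change that would bring it to the average."""
    average = sum(readings_db.values()) / len(readings_db)
    return {band: round(average - level, 1) for band, level in readings_db.items()}


# Hypothetical readings (Hz -> dB), for illustration only.
example = {32: 62.0, 250: 70.0, 1000: 72.0, 4000: 70.0, 16000: 61.0}

for band, offset in eq_offsets(example).items():
    print(f"{band} Hz: {offset:+.1f} dB")
```

A positive offset means the band measured quieter than average and could be nudged up on the equalizer; a negative one means it could come down.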

So my hearing may be getting worse, but I can still identify amplitude variations across the audible spectrum. At least now when I’m forced to watch M*A*S*H reruns, I can better appreciate the audio balance.

–Simon